Calibration Method between Markerless Motion Capture and Optical Motion Capture

Summary

There is a growing need for human body measurement using markerless motion capture. In this study, a markerless motion capture system using six calibrated RGB cameras (MV-OpenPose) is used with the aim of achieving measurements equivalent to those of marker-based optical motion capture systems. One issue is that MV-OpenPose uses a coordinate space different from that of common optical motion capture systems. The purpose of this study is therefore to transform the joint coordinates acquired with MV-OpenPose into the coordinate space of an optical motion capture system so that the two can be aligned.

MV-OpenPose

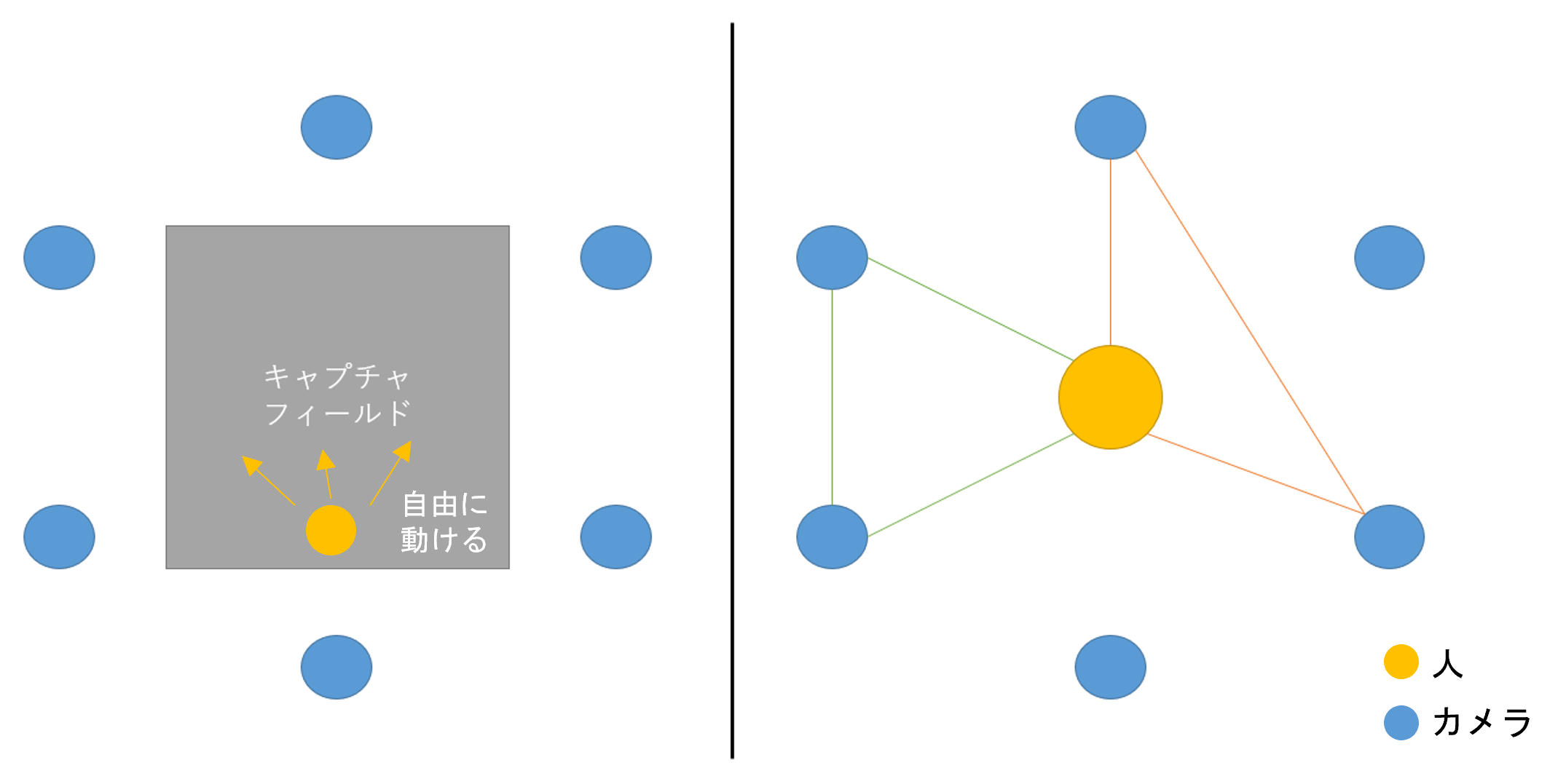

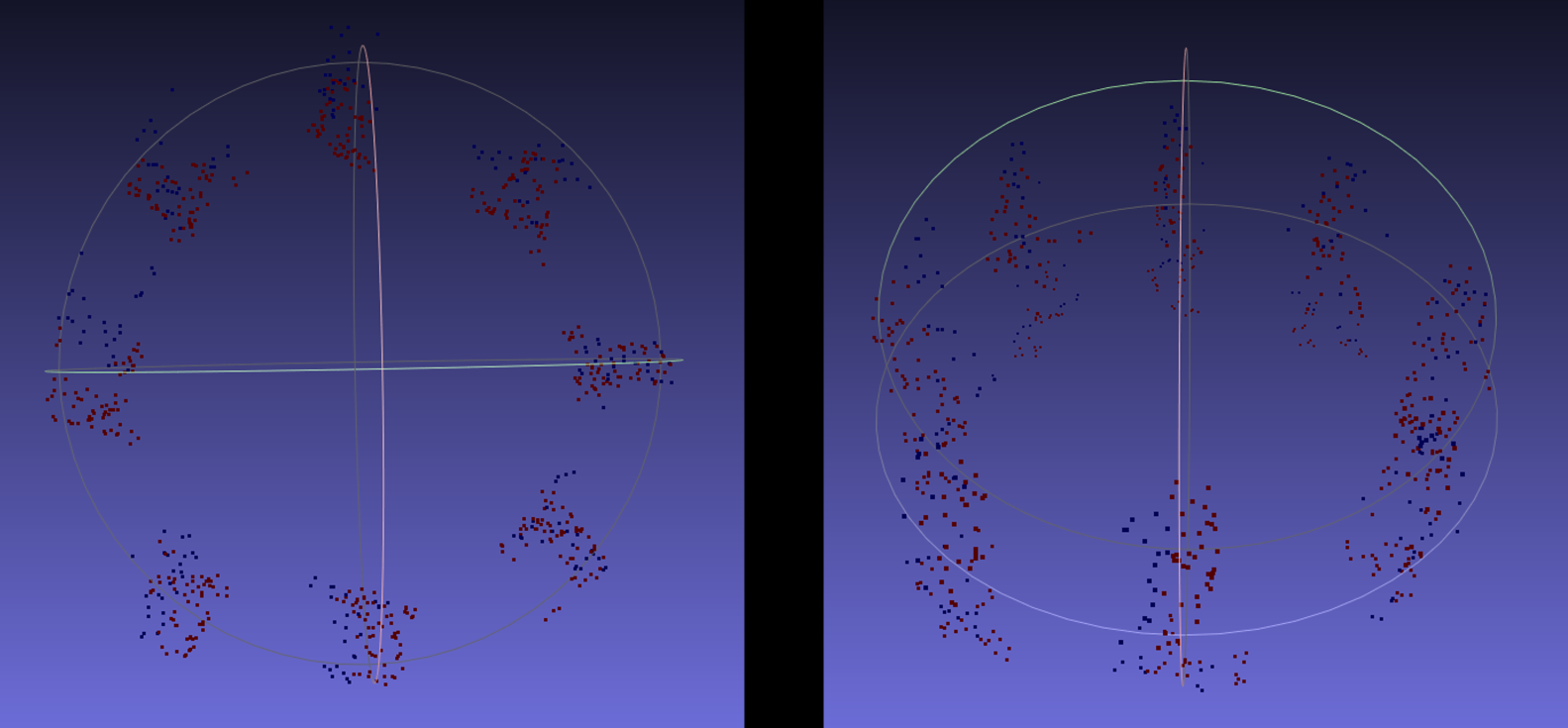

MV-OpenPose is a markerless motion capture system that uses six RGB cameras. As shown in the figure below, the cameras are arranged to surround the subject, and the 3D coordinates of each joint are estimated by triangulation. Measurement accuracy can be improved by averaging the 3D points estimated by triangulation.

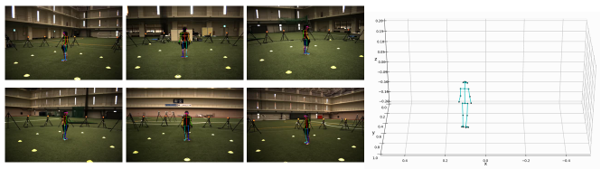
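As an illustration of the triangulation step, the following is a minimal sketch and not the MV-OpenPose implementation. It assumes each camera is described by a known 3x4 projection matrix obtained from calibration, and it assumes that the averaging is done over the estimates from every camera pair; the function names `triangulate_point` and `triangulate_pairwise_average` are hypothetical.

```python
from itertools import combinations

import numpy as np


def triangulate_point(projections, pixels):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    projections: sequence of 3x4 projection matrices (assumed known).
    pixels: sequence of (u, v) image coordinates of the same joint.
    """
    rows = []
    for P, (u, v) in zip(projections, pixels):
        # Each view contributes two linear constraints on the homogeneous point.
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.stack(rows)
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def triangulate_pairwise_average(projections, pixels):
    """Average the 3D estimates obtained from every pair of cameras (assumption)."""
    estimates = [
        triangulate_point([projections[i], projections[j]], [pixels[i], pixels[j]])
        for i, j in combinations(range(len(projections)), 2)
    ]
    return np.mean(estimates, axis=0)
```

With noisy 2D detections, averaging several pairwise estimates tends to reduce the influence of any single poor detection; a weighted average or a single least-squares solve over all views would be alternative design choices.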

The figures below show actual motion capture using MV-OpenPose and an example of the captured results.

ICP Algorithm

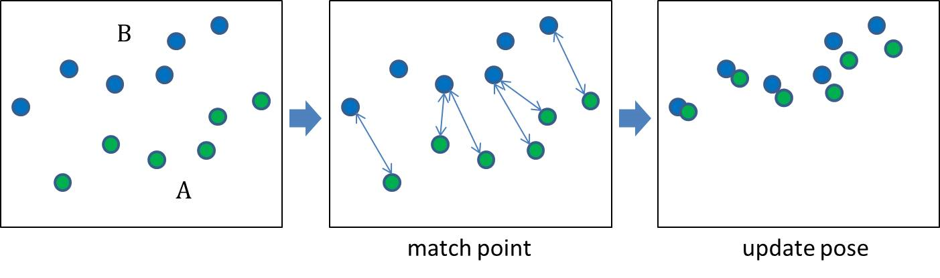

The proposed method uses the Iterative Closest Point (ICP) algorithm, which aligns two point clouds by iteratively refining their relative position and orientation.
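The iteration can be sketched as follows. This is a minimal point-to-point variant under stated assumptions, not the implementation used in this study: correspondences are found by brute-force nearest-neighbor search, the rigid transform for each iteration is solved in closed form with an SVD (the Kabsch method), and the names `best_rigid_transform` and `icp` as well as the `max_iter` and `tol` parameters are hypothetical.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t with dst ~ src @ R.T + t."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(src, dst, R0=None, t0=None, max_iter=50, tol=1e-9):
    """Align src (N x 3) to dst (M x 3), starting from an optional initial guess."""
    R = np.eye(3) if R0 is None else np.array(R0, dtype=float)
    t = np.zeros(3) if t0 is None else np.array(t0, dtype=float)
    prev_err = np.inf
    for _ in range(max_iter):
        moved = src @ R.T + t
        # Brute-force nearest neighbor in dst for every transformed src point.
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(src)), nn]).mean()
        if abs(prev_err - err) < tol:
            break  # mean residual no longer changes
        prev_err = err
        # Re-estimate the full transform from the current correspondences.
        R, t = best_rigid_transform(src, dst[nn])
    return R, t
```

Because each iteration only finds a locally better alignment, the result depends on the starting guess (`R0`, `t0`), which is why a reasonable initial alignment matters.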

Procedure for matching the two motion capture systems

The positioning procedure consists of two steps: a manual rough alignment, which sets the initial values for ICP, and a fine alignment using the ICP algorithm.

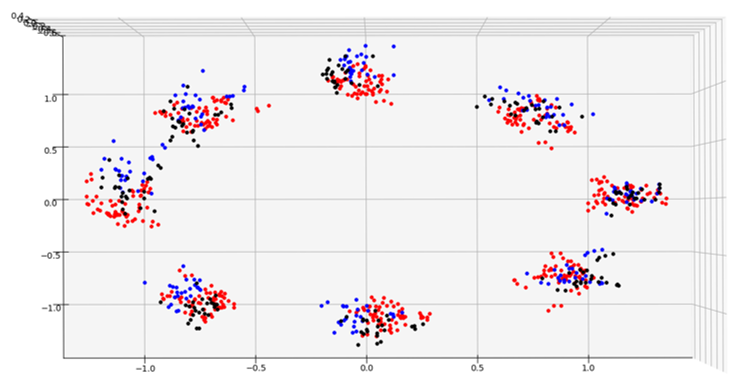

(Red: optical motion capture, Blue: MV-OpenPose before ICP, Black: MV-OpenPose after ICP)
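The manual rough alignment can be thought of as choosing an approximate rotation and translation by hand. The sketch below is only an illustration under stated assumptions: the source does not say how the rough alignment is parameterized, so the rotation about a vertical z axis, the `yaw_deg` and `offset` parameters, and the function names are all hypothetical.

```python
import numpy as np


def rough_initial_transform(yaw_deg, offset):
    """Hand-chosen rotation about the z axis (assumed vertical) plus a translation."""
    a = np.deg2rad(yaw_deg)
    R0 = np.array([
        [np.cos(a), -np.sin(a), 0.0],
        [np.sin(a),  np.cos(a), 0.0],
        [0.0,        0.0,       1.0],
    ])
    t0 = np.asarray(offset, dtype=float)
    return R0, t0


def apply_transform(points, R, t):
    """Apply a rigid transform to an (N x 3) array of joint positions."""
    return np.asarray(points, dtype=float) @ R.T + t
```

The resulting `R0` and `t0` would serve as the starting estimate for the ICP refinement step, which then corrects the remaining misalignment.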

Experimental results

The alignment of the two motion capture systems using the proposed method was verified in two situations. The first is a slow, circular walking motion. The second is an acrobatic movement that includes standing on one's head and spinning around. Even for the second, complex and intense movement, the two sets of motion capture data are in good agreement. However, the discrepancy is larger than in the first situation, and the verification confirmed that in some frames MV-OpenPose either failed to detect the toes or clearly estimated them in the wrong position.
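One way to quantify such a discrepancy is the mean Euclidean distance per joint between the two aligned recordings. The sketch below is an assumption about how this could be measured, not the evaluation used in this study: it assumes both recordings are time-synchronized arrays of shape (frames, joints, 3) with matching joint order, and that undetected joints are stored as NaN. The name `per_joint_error` is hypothetical.

```python
import numpy as np


def per_joint_error(optical, markerless):
    """Mean Euclidean distance per joint, skipping frames where a joint is NaN."""
    diff = np.asarray(markerless, dtype=float) - np.asarray(optical, dtype=float)
    # Distance per frame and joint; NaN wherever a joint was not detected.
    dist = np.linalg.norm(diff, axis=2)
    # Average over frames, ignoring the missing detections.
    return np.nanmean(dist, axis=0)
```

Reporting the error per joint rather than as a single average makes it easier to see when one joint, such as a toe, accounts for most of the discrepancy.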


Computer Vision and Graphics Laboratory