
Underwater image enhancement by DNN

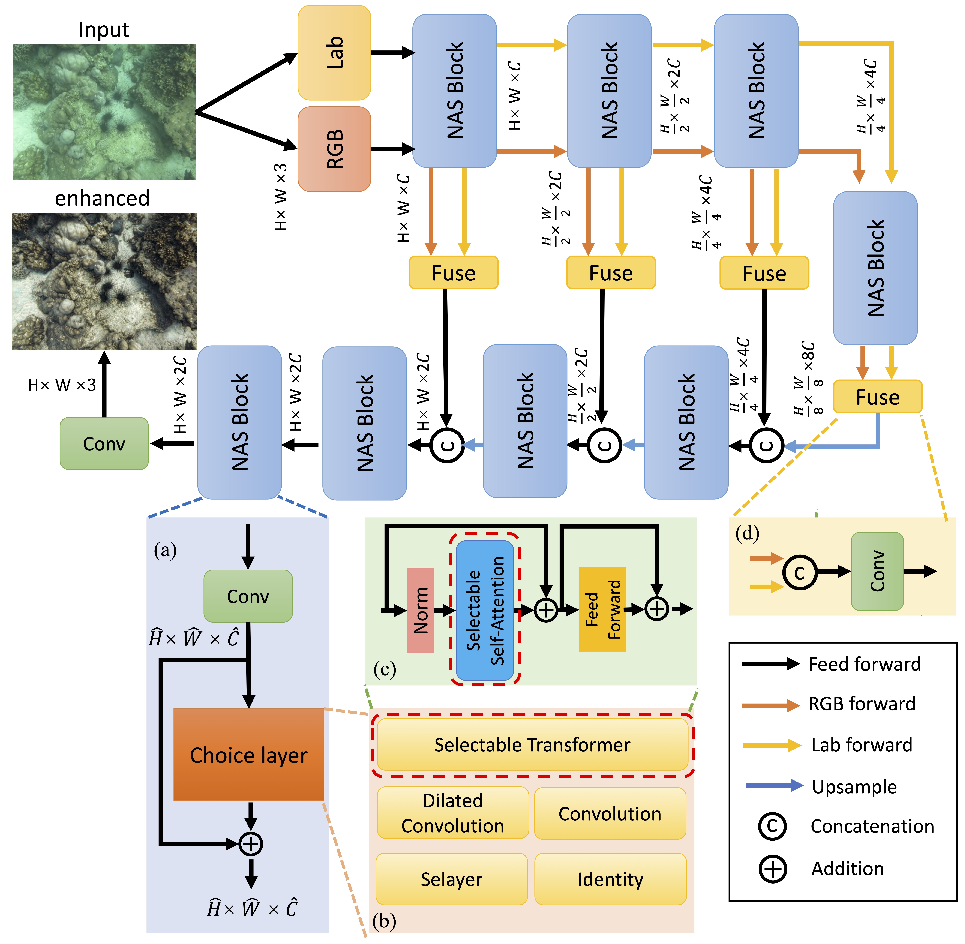

AutoEnhancer: Transformer on U-Net Architecture search for Underwater Image Enhancement

Although deep learning-based approaches have successfully introduced various structures and individually designed neural networks for this task, these networks usually rely on the designer's knowledge and experience for validation. In this paper, we employ Neural Architecture Search (NAS) to search for the optimal architecture for underwater image enhancement, so that we can obtain an effective and lightweight deep network. To enhance the capability of the neural network, we propose a new search space that includes not only common operators, such as convolution, but also transformers. Further, we apply NAS to the transformer itself and propose a selectable transformer structure: the multi-head self-attention module is treated as an optional unit, which yields different transformer structures. This modification expands the search space and boosts the learning capability of the network.

Our NAS-based enhancement network inherits the widely used U-Net architecture, which consists of two components: an encoder and a decoder. Unlike previous U-Net architectures, the original residual structures or convolution blocks are replaced by the proposed NAS blocks, so that the network can automatically select the most suitable operators and learn robust and reliable features for image enhancement. The figure below shows the proposed NAS-based framework. Given a low-quality image, its representations in two color spaces, namely RGB and Lab, are fed into the proposed network. In the encoder, multi-level features are extracted using downsampling operations and different receptive fields. These features are then upsampled and fused in the decoder to recover the final enhanced image.
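To make the effect of the selectable transformer concrete, here is a minimal Python sketch of a per-block operator search space for a U-Net-style encoder/decoder. The operator names, the number of blocks, and the use of plain random search are illustrative assumptions only; the text does not state AutoEnhancer's actual candidate set or search strategy, and `score_fn` is a hypothetical stand-in for training and validating a candidate network.

```python
import random
from typing import Callable, Dict, List, Optional

# Hypothetical candidate operators for one NAS block (illustrative only;
# the real AutoEnhancer search space may differ).
CONV_OPS = ["conv3x3", "conv5x5", "dilated_conv3x3"]
# Treating multi-head self-attention (MHSA) as an optional unit turns one
# fixed transformer block into two selectable variants.
TRANSFORMER_OPS = ["transformer_with_mhsa", "transformer_without_mhsa"]
CANDIDATE_OPS = CONV_OPS + TRANSFORMER_OPS

Architecture = Dict[str, List[str]]


def search_space_size(num_ops: int, num_encoder: int, num_decoder: int) -> int:
    """Each NAS block picks one operator independently of the others."""
    return num_ops ** (num_encoder + num_decoder)


def sample_architecture(rng: random.Random, num_encoder: int, num_decoder: int) -> Architecture:
    """Draw one U-Net-style candidate: one operator per encoder and decoder block."""
    return {
        "encoder": [rng.choice(CANDIDATE_OPS) for _ in range(num_encoder)],
        "decoder": [rng.choice(CANDIDATE_OPS) for _ in range(num_decoder)],
    }


def random_search(score_fn: Callable[[Architecture], float], trials: int,
                  num_encoder: int = 3, num_decoder: int = 3,
                  seed: int = 0) -> Optional[Architecture]:
    """Keep the highest-scoring candidate among `trials` random samples.

    `score_fn` is a hypothetical placeholder for training a candidate and
    measuring its enhancement quality on validation data.
    """
    rng = random.Random(seed)
    best: Optional[Architecture] = None
    best_score = float("-inf")
    for _ in range(trials):
        arch = sample_architecture(rng, num_encoder, num_decoder)
        score = score_fn(arch)
        if score > best_score:
            best, best_score = arch, score
    return best


if __name__ == "__main__":
    # Search-space size with a single fixed transformer vs. the selectable one,
    # for 3 encoder + 3 decoder blocks.
    print(search_space_size(len(CANDIDATE_OPS) - 1, 3, 3))
    print(search_space_size(len(CANDIDATE_OPS), 3, 3))
```

With six blocks, the optional MHSA unit grows the space from 4^6 = 4096 to 5^6 = 15625 candidates; whatever search strategy is actually used, the gap widens with every additional block.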

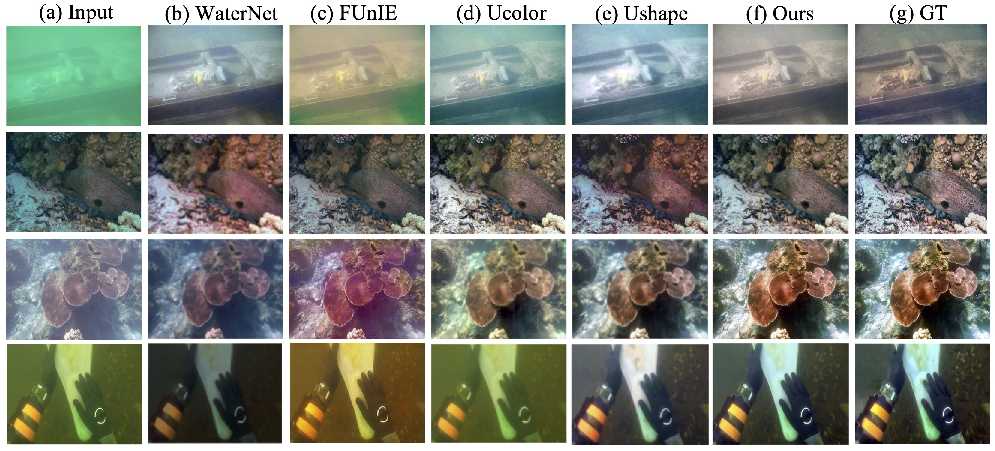
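The network receives the same image in two color spaces. As a hedged sketch, the following converts sRGB pixels (channels in [0, 1]) to CIELAB using the standard sRGB transfer curve and a D65 white point, and returns both views of a flattened image. The text does not say how the authors compute the Lab image, so the constants and the helper `rgb_and_lab` are assumptions for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]

# Linear sRGB -> CIE XYZ matrix and D65 reference white (standard values).
_RGB_TO_XYZ = (
    (0.4124564, 0.3575761, 0.1804375),
    (0.2126729, 0.7151522, 0.0721750),
    (0.0193339, 0.1191920, 0.9503041),
)
_WHITE_D65 = (0.95047, 1.0, 1.08883)
_DELTA = 6 / 29


def _srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve for one channel in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    """CIELAB companding function (cube root with a linear toe near zero)."""
    return t ** (1 / 3) if t > _DELTA ** 3 else t / (3 * _DELTA ** 2) + 4 / 29


def rgb_to_lab(rgb: Pixel) -> Pixel:
    """Convert one sRGB pixel with channels in [0, 1] to (L*, a*, b*)."""
    lin = [_srgb_to_linear(c) for c in rgb]
    x, y, z = (sum(m * c for m, c in zip(row, lin)) for row in _RGB_TO_XYZ)
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), _WHITE_D65))
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def rgb_and_lab(image: List[Pixel]) -> Tuple[List[Pixel], List[Pixel]]:
    """Return the two color-space views (RGB, Lab) of a flattened image."""
    return image, [rgb_to_lab(p) for p in image]


if __name__ == "__main__":
    rgb, lab = rgb_and_lab([(1.0, 1.0, 1.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)])
    for p in lab:
        print(tuple(round(v, 2) for v in p))
```

Lab separates lightness (L*) from the two color-opponent axes (a*, b*), which is one plausible reason to supply it alongside RGB when the main degradation is a color cast.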

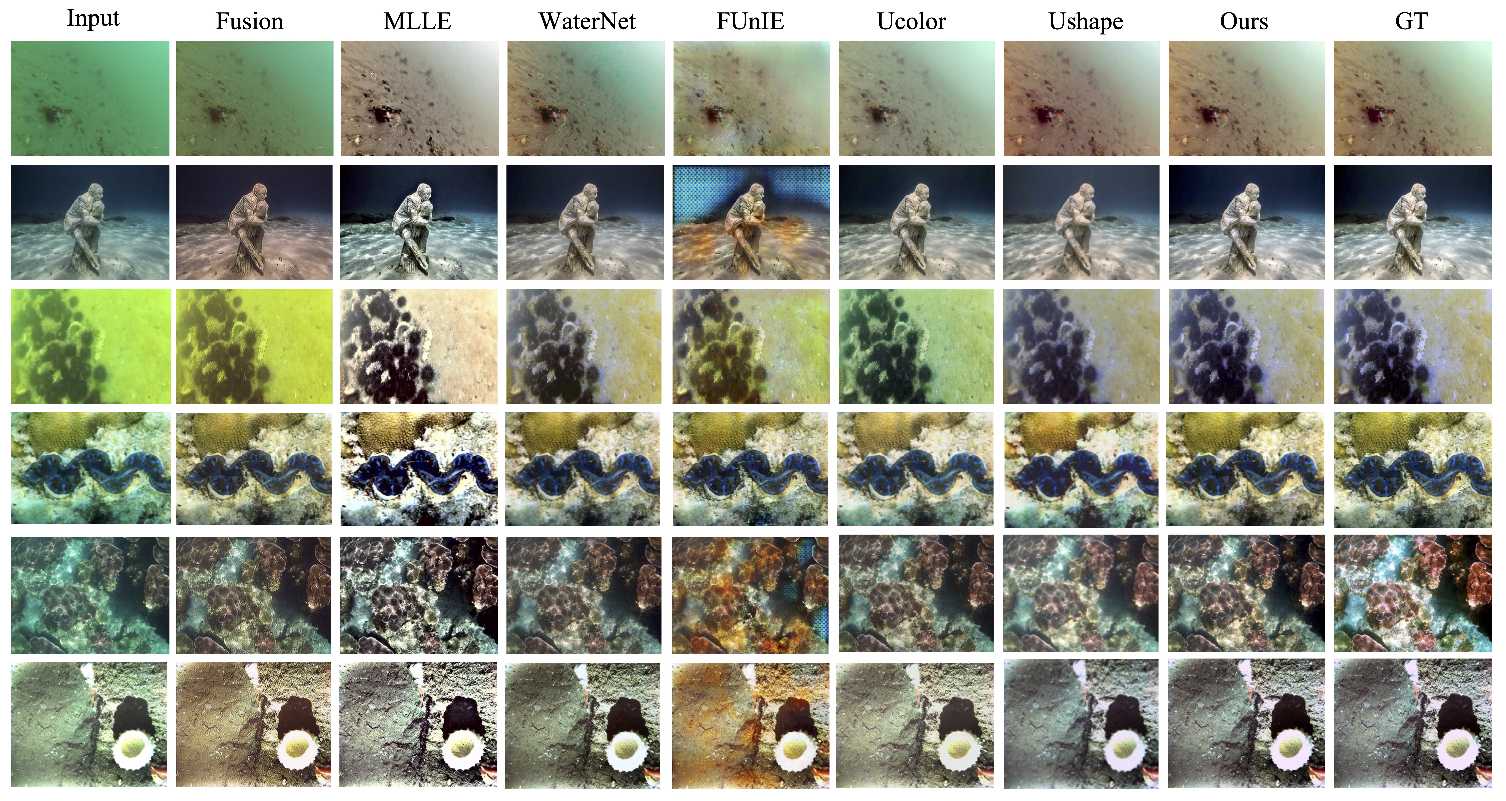

The figure below shows a visual comparison between the proposed method and state-of-the-art methods on underwater scenes. The shipwreck (first row) demonstrates that underwater images may suffer from multiple kinds of degradation, such as color distortion, blurring, and splotchy textures. Previous approaches can remove some of this degradation and recover the original content to some extent. However, their enhanced images still have their own drawbacks. For example, FUnIE removes most of the distorted colors, but stubborn noise remains in the bottom-left and bottom-right corners. WaterNet and Ushape recover the content and texture of the original image, but they change the color style of the entire image. Compared with these results, ours not only restores the image content but also preserves the original color style as much as possible.

Resources

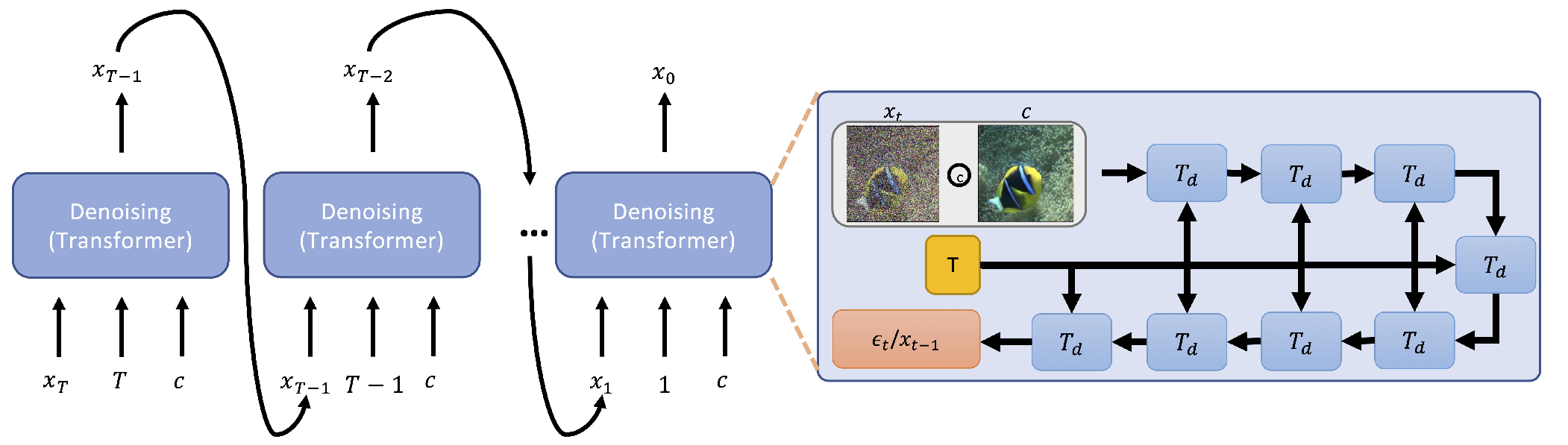

Data: GoogleDrive (pre-trained model)

Publications

Underwater Image Enhancement by Transformer-based Diffusion Model with Non-uniform Sampling for Skip Strategy

In this paper, we present an approach to underwater image enhancement based on a diffusion model. Our method adapts conditional denoising diffusion probabilistic models to generate enhanced images, using the underwater images as the condition. To improve the efficiency of the reverse process in the diffusion model, we take two measures. First, we propose a lightweight transformer-based denoising network, which effectively reduces the time of each forward pass of the network. Second, we introduce a skip sampling strategy to reduce the number of iterations. Furthermore, building on the skip sampling strategy, we propose two non-uniform sampling methods for the time-step sequence, namely piecewise sampling and searching with an evolutionary algorithm. Both are effective and further improve performance over the previous uniform sampling with the same number of steps. Finally, we evaluate the proposed approach against recent state-of-the-art methods on widely used underwater enhancement datasets. The experimental results show that our approach achieves both competitive performance and high efficiency.

As shown in the figure, our framework mainly consists of the diffusion process, the transformer-based denoising network, and a channel-wise attention module. Our goal is to generate the corresponding enhanced image given a low-quality image. The original (unconditional) diffusion model can generate such images, but its results are not determined by the input. Therefore, we introduce the conditional diffusion model. Given the inputs, namely a noisy image, a conditional image, and the time step, the network estimates the noise distribution. During training, we use the L1 loss to optimize the network.
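The conditional training objective can be illustrated with a minimal pure-Python sketch. It assumes the standard noise-prediction parameterization and a linear beta schedule from 1e-4 to 0.02 over T = 1000 steps (the settings of the original DDPM paper); the authors' actual schedule is not given here. Images are flattened lists of floats, and `denoiser` is a hypothetical stand-in for the transformer-based denoising network, which receives the noisy image, the underwater (conditional) image, and the time step.

```python
import math
import random
from typing import Callable, List

Image = List[float]  # a flattened image, for illustration only
Denoiser = Callable[[Image, Image, int], Image]


def linear_beta_schedule(T: int = 1000, beta_start: float = 1e-4,
                         beta_end: float = 0.02) -> List[float]:
    """Noise variances beta_t, increasing linearly over T steps."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def cumulative_alphas(betas: List[float]) -> List[float]:
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0: Image, t: int, alpha_bars: List[float], noise: Image) -> Image:
    """Forward process: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]


def l1_loss(pred: Image, target: Image) -> float:
    """Mean absolute error."""
    return sum(abs(p - q) for p, q in zip(pred, target)) / len(pred)


def training_loss(denoiser: Denoiser, clean: Image, underwater: Image,
                  alpha_bars: List[float], rng: random.Random) -> float:
    """One conditional step: noise the clean reference image, predict that noise
    from (x_t, underwater image, t), and score the prediction with L1."""
    t = rng.randrange(len(alpha_bars))
    noise = [rng.gauss(0.0, 1.0) for _ in clean]
    x_t = q_sample(clean, t, alpha_bars, noise)
    return l1_loss(denoiser(x_t, underwater, t), noise)
```

Conditioning enters only through the `underwater` argument of the denoiser, so the same forward process serves every training pair while the prediction is tied to the specific input image.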

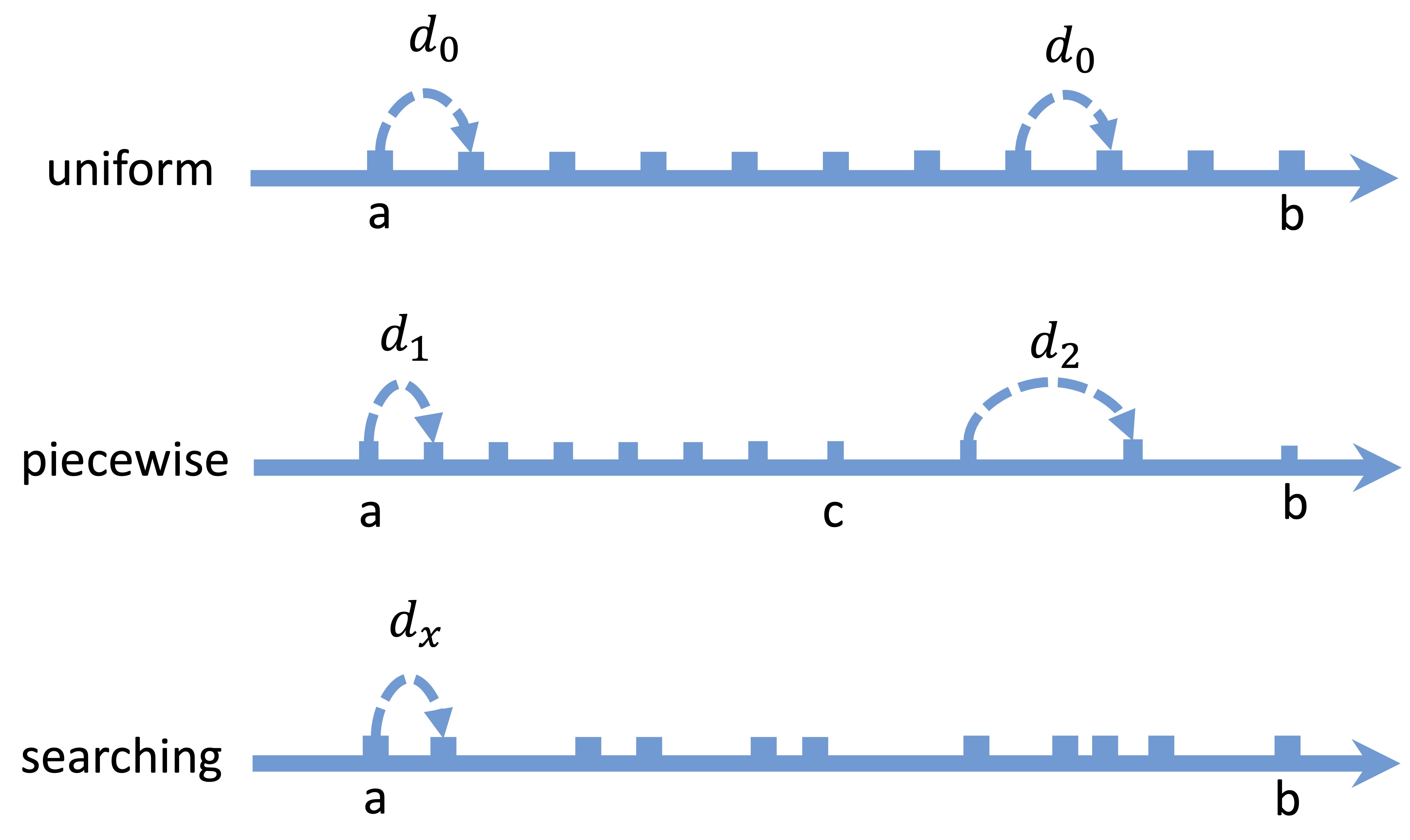
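The skip sampling strategy runs the reverse process over only a subsequence of the time steps. The text does not specify the update rule, so this sketch assumes a DDIM-style deterministic step (eta = 0), one well-known way to jump from step t directly to an earlier step. The schedule is the same assumed linear one, and `denoiser` is again a hypothetical noise predictor conditioned on the underwater image.

```python
import math
import random
from typing import Callable, List, Sequence

Image = List[float]
Denoiser = Callable[[Image, Image, int], Image]


def alpha_bars_linear(T: int = 1000, beta_start: float = 1e-4,
                      beta_end: float = 0.02) -> List[float]:
    """Cumulative products of (1 - beta_t) for a linear beta schedule."""
    out, prod = [], 1.0
    for i in range(T):
        prod *= 1.0 - (beta_start + (beta_end - beta_start) * i / (T - 1))
        out.append(prod)
    return out


def ddim_step(x_t: Image, eps: Image, t: int, t_prev: int,
              alpha_bars: List[float]) -> Image:
    """Deterministic jump from step t to t_prev (t_prev = -1 means the clean image)."""
    a_t = alpha_bars[t]
    a_prev = alpha_bars[t_prev] if t_prev >= 0 else 1.0
    # Clean-image estimate implied by the predicted noise.
    x0_hat = [(x - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t) for x, e in zip(x_t, eps)]
    return [math.sqrt(a_prev) * x0 + math.sqrt(1.0 - a_prev) * e
            for x0, e in zip(x0_hat, eps)]


def skip_sample(denoiser: Denoiser, underwater: Image, steps: Sequence[int],
                alpha_bars: List[float], rng: random.Random) -> Image:
    """Run the reverse process only over `steps`, given in decreasing order."""
    x = [rng.gauss(0.0, 1.0) for _ in underwater]
    for i, t in enumerate(steps):
        t_prev = steps[i + 1] if i + 1 < len(steps) else -1
        eps = denoiser(x, underwater, t)
        x = ddim_step(x, eps, t, t_prev, alpha_bars)
    return x
```

With a 10-step subsequence such as `range(999, -1, -100)`, the denoiser runs 10 times instead of 1000; where those steps are placed is exactly what non-uniform sampling changes.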

Previous methods usually use uniform sampling with a fixed stride, which is effective but inflexible. Moreover, we observe that the former part of the reverse process is more important than the latter part. Therefore, we propose a piecewise sampling method, which employs different sampling strides in different segments of the time-step sequence. We also propose a method that searches for the optimal sampling sequence with an evolutionary algorithm. The details are shown below.
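Both non-uniform strategies can be sketched in pure Python. The segment boundary, the strides, the population settings, and especially the fitness function are hypothetical: in practice the fitness of a sequence would come from running the reverse process with it and measuring enhancement quality on validation images, which is not shown here. Sequences are listed in the order the reverse process visits them (from large to small t), so the "former part" is the high-t end.

```python
import random
from typing import Callable, List, Sequence

Steps = List[int]  # time steps in the order the reverse process visits them


def uniform_steps(T: int, num_steps: int) -> Steps:
    """Baseline: a fixed stride starting from t = T - 1."""
    stride = T // num_steps
    return list(range(T - 1, -1, -stride))[:num_steps]


def piecewise_steps(T: int, boundary: int, dense_stride: int, sparse_stride: int) -> Steps:
    """Small strides for t >= boundary (the former part of the reverse process),
    larger strides afterwards. Assumes 0 < boundary < T."""
    former = list(range(T - 1, boundary - 1, -dense_stride))
    latter = list(range(former[-1] - sparse_stride, -1, -sparse_stride))
    return former + latter


def _mutate(steps: Steps, T: int, rng: random.Random) -> Steps:
    """Replace one time step with a random step not already in the sequence."""
    kept = set(steps)
    kept.remove(rng.choice(steps))
    while True:
        candidate = rng.randrange(T)
        if candidate not in kept:
            kept.add(candidate)
            return sorted(kept, reverse=True)


def _crossover(a: Steps, b: Steps, rng: random.Random) -> Steps:
    """Draw a child of the same length from the union of both parents' steps."""
    pool = sorted(set(a) | set(b))
    return sorted(rng.sample(pool, len(a)), reverse=True)


def evolutionary_search(fitness: Callable[[Steps], float], T: int, num_steps: int,
                        init: Sequence[Steps] = (), population: int = 20,
                        generations: int = 30, seed: int = 0) -> Steps:
    """Elitist evolutionary search over step sequences of fixed length
    (requires population >= 4)."""
    rng = random.Random(seed)
    pop = [list(s) for s in init]
    while len(pop) < population:
        pop.append(sorted(rng.sample(range(T), num_steps), reverse=True))
    for _ in range(generations):
        # Keep the better half unchanged (elitism); refill with offspring.
        parents = sorted(pop, key=fitness, reverse=True)[: population // 2]
        children = []
        while len(parents) + len(children) < population:
            a, b = rng.sample(parents, 2)
            child = _crossover(a, b, rng)
            if rng.random() < 0.5:
                child = _mutate(child, T, rng)
            children.append(child)
        pop = parents + children
    return max(pop, key=fitness)
```

For example, `piecewise_steps(1000, 600, 50, 200)` places eight steps at t >= 600 and only three below it. Because the better half survives each generation unchanged, the sequence returned by `evolutionary_search` is never worse, under the given fitness, than any baseline passed in through `init`, such as the uniform or piecewise sequence.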

The figure below presents a visual comparison between the proposed methods and other state-of-the-art approaches. Our approach is particularly strong at color correction. For example, Ucolor removes the green cast in the underwater image to some extent, but its enhanced image does not restore the original colors of the objects. A similar problem appears in the images enhanced by Ushape: it introduces a reddish tint in the enhanced image in the second row, whereas ours shows no such effect. This visual comparison with previous methods indicates that the proposed method achieves competitive performance in color correction and restoration.

Resources

Data: GoogleDrive (pre-trained model)

Publications


Computer Vision and Graphics Laboratory