Marker-less facial motion capture system

Facial animation and facial motion capture are important topics in computer vision and graphics.

Marker-based approaches require time to set up the markers, and they are difficult to use for scenes in which an object must be in contact with the face.

We therefore classify the facial parts into five types: nose, mouth, eye, cheek, and obstacle.

We define the feature vector of each point on the face using Fast Point Feature Histograms (FPFH).
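As a rough illustration of what an FPFH-style descriptor is built from, the sketch below computes the angular features between two oriented points (a position plus a unit surface normal), following the commonly published Point Feature Histogram formulation. The function name `pair_features` and the small vector helpers are hypothetical, and the sketch omits the histogram binning and neighbor weighting that a full FPFH implementation performs.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def norm(a):
    return math.sqrt(dot(a, a))

def pair_features(p_s, n_s, p_t, n_t):
    """Return (alpha, phi, theta, d) for a source and a target oriented point.

    Assumes n_s and n_t are unit normals. The local frame (u, v, w) is
    anchored at the source point.
    """
    diff = sub(p_t, p_s)
    d = norm(diff)
    if d == 0.0:
        raise ValueError("points coincide")
    direction = tuple(x / d for x in diff)

    u = n_s
    v = cross(u, direction)
    v_len = norm(v)
    if v_len == 0.0:
        raise ValueError("source normal is parallel to the connecting line")
    v = tuple(x / v_len for x in v)
    w = cross(u, v)

    alpha = dot(v, n_t)                           # target normal along v
    phi = dot(u, direction)                       # source normal vs. connecting line
    theta = math.atan2(dot(w, n_t), dot(u, n_t))  # rotation of target normal around v
    return alpha, phi, theta, d
```

In a full descriptor, these angles would be collected over each point's neighborhood and binned into a histogram, which then serves as the per-point feature vector.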

The proposed method consists of three parts: (1) facial parts recognition, (2) non-rigid deformation tracking, and (3) key point refinement.

(1) Facial parts recognition

We define the facial parts as follows: mouth, nose, eyes, cheek, and noise.

We recognize the facial parts using the Random Forest algorithm, so that the registration problem becomes simpler to solve.

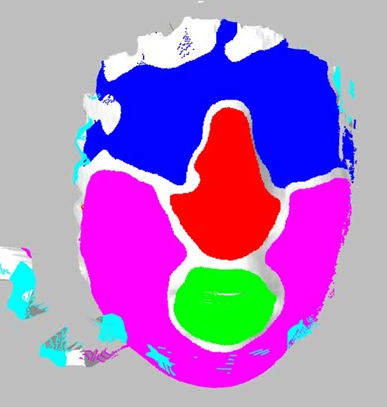

The result of the facial parts recognition is as follows:

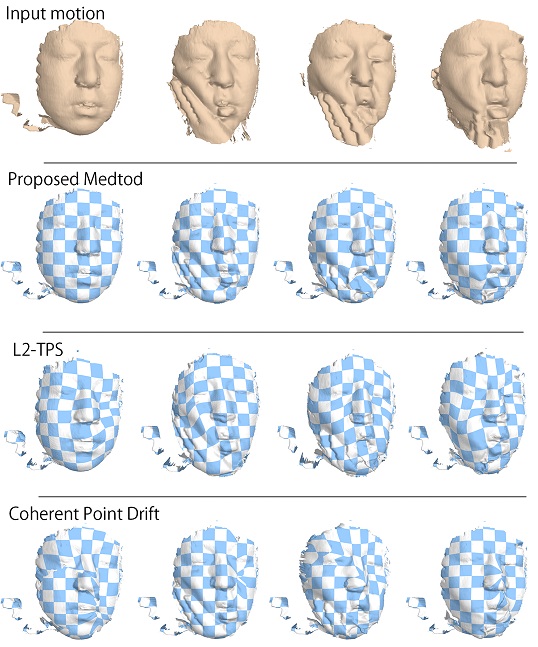

(2) Non-rigid deformation tracking

Using the L2-TPS algorithm, we calculate the transformation of each point from the point set of the first frame to the point set of each other frame.

After that, we aggregate the per-point transformations into a small number of key points that represent the deformation of the face.

We use a free-form deformation (FFD) method with radial basis functions (RBF) as the basis functions.
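The idea of driving a deformation from a few key points with radial basis functions can be sketched as follows. This is a minimal illustration, not the authors' implementation: the Gaussian kernel, the `sigma` parameter, and the function names `fit_rbf` and `deform` are assumptions made for the example. It uses only the Python standard library, so the small linear system is solved by hand.

```python
import math

def gaussian(r, sigma=1.0):
    # Assumed kernel for this sketch; the source does not name the kernel.
    return math.exp(-(r / sigma) ** 2)

def solve(A, B):
    """Solve A X = B (B may have several columns) by Gaussian elimination."""
    n = len(A)
    M = [list(A[i]) + list(B[i]) for i in range(n)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, len(M[r])):
                M[r][c] -= f * M[col][c]
    # Back substitution, one solution row per unknown.
    X = [None] * n
    for i in reversed(range(n)):
        row = M[i]
        X[i] = [
            (row[n + k] - sum(row[j] * X[j][k] for j in range(i + 1, n))) / row[i]
            for k in range(len(B[0]))
        ]
    return X

def fit_rbf(key_points, displacements, sigma=1.0):
    """Find per-key-point weights that reproduce the given displacements."""
    A = [[gaussian(math.dist(p, q), sigma) for q in key_points] for p in key_points]
    return solve(A, displacements)

def deform(point, key_points, weights, sigma=1.0):
    """Move an arbitrary 3D point by the interpolated displacement field."""
    disp = [0.0, 0.0, 0.0]
    for c, w in zip(key_points, weights):
        phi = gaussian(math.dist(point, c), sigma)
        for k in range(3):
            disp[k] += phi * w[k]
    return tuple(point[k] + disp[k] for k in range(3))
```

With weights fitted this way, each key point lands exactly on its target position, while every other point on the face receives a smoothly interpolated displacement.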

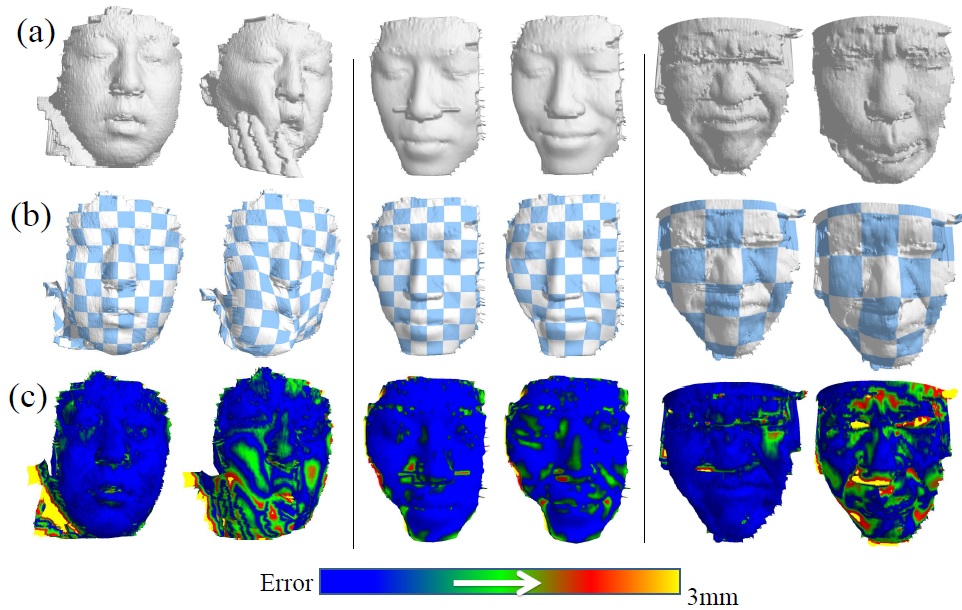

The proposed method succeeded in detecting the movement of a face as the deformation from the initial shape to the other shapes.

The tracking errors are under 2 mm.

(3) Key point refinement

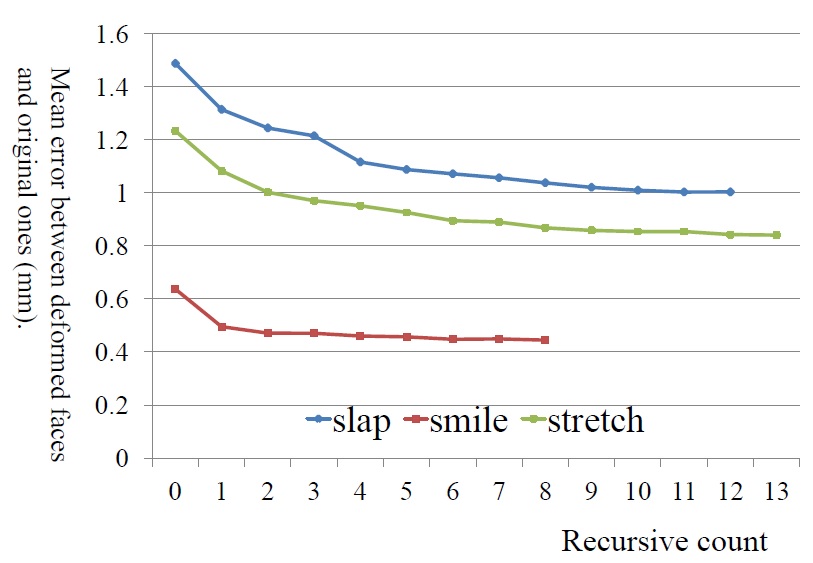

We propose a method to detect key points that efficiently represent the motions of a face.

We optimize the key points for a Radial Basis Function (RBF) based 3D deformation technique.

RBF-based deformation is a common technique for representing the movement of 3D objects in CG animation.

Since key-point-based approaches usually deform objects by interpolating the movements of the key points, these approaches cause errors between the deformed shapes and the original ones.

By utilizing the face space deformation, the proposed method can define suitable pairs of key points to minimize the errors.

Our results show that the mean error between the original motions and the tracked motions can be reduced to less than 1.0 mm for all three types of facial motion.
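One plausible way to compute such a mean error is sketched below, assuming it is the average Euclidean distance between corresponding points of the original and tracked shapes, with coordinates in millimeters. The source does not specify the exact metric, and the function name `mean_error` is hypothetical.

```python
import math

def mean_error(original, tracked):
    """Average Euclidean distance between corresponding 3D points."""
    if len(original) != len(tracked) or not original:
        raise ValueError("point sets must be non-empty and of equal length")
    return sum(math.dist(p, q) for p, q in zip(original, tracked)) / len(original)
```

Under this assumption, a per-frame value from `mean_error` could be averaged over a sequence to summarize one type of facial motion.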

Publications

Kawasaki Laboratory